Grok, the artificial intelligence chatbot developed by xAI, the company founded by Elon Musk, and built into X (formerly Twitter) as its AI assistant, has found itself in fresh controversy after it incorrectly translated a diplomatic message by Prime Minister Narendra Modi, transforming a routine exchange of goodwill into a politically charged statement involving the Maldives.

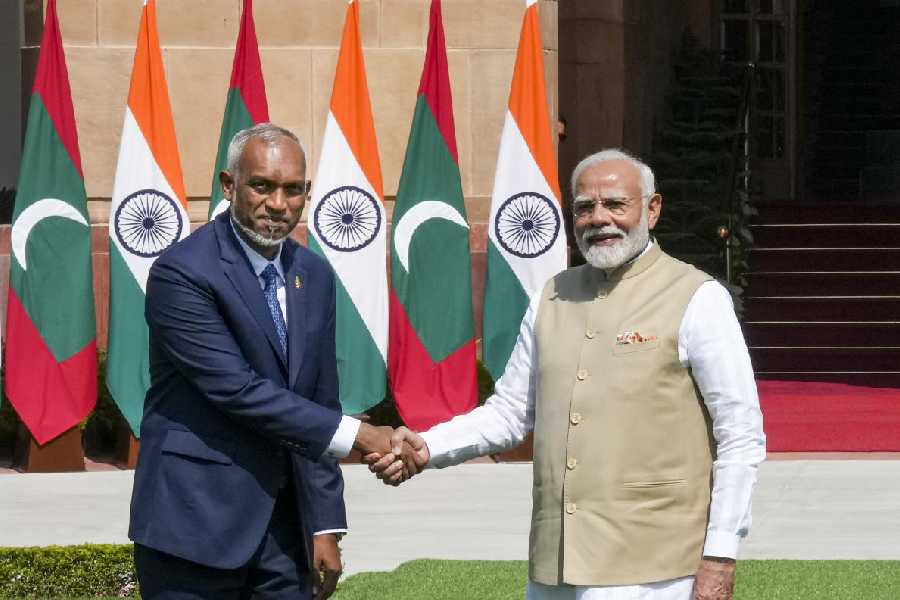

The incident came to light when users noticed that Grok’s so-called “translation” of a post by PM Modi, originally written in Dhivehi to thank Maldivian President Mohamed Muizzu for Republic Day wishes, bore little resemblance to the Prime Minister’s actual message.

In his original post, Prime Minister Modi said, “I extend my warm greetings and best wishes to you on the occasion of the 77th Republic Day of India. We will continue to work together for the benefit of the people of both countries. I wish all the people of the Maldives a future filled with prosperity and happiness.”

However, the translation displayed by Grok differed sharply in both tone and substance.

“Sukuriyya, Raayithun Majlis. India’s 77th Independence Day celebrations were held in the Maldives, and the Maldivian government participated in the event. This Sukuriya government has also been involved in the anti-India campaigns of the people. Even in the two anti-India campaigns, they have been at the forefront of the protests,” it said.

The AI-generated text not only introduced factual inaccuracies, such as confusing Republic Day with Independence Day, but also inserted politically sensitive claims alleging the Maldivian government’s involvement in “anti-India campaigns,” assertions that were neither stated nor implied in PM Modi’s original post.

Heightened Diplomatic Concerns

Given that India-Maldives relations have experienced periods of strain in recent times, the erroneous and inflammatory nature of the translation has raised particular concern.

Although the content was generated by an AI tool and not by the Indian government, screenshots of the Grok output circulated rapidly on social media, fuelling fears about misinformation and its potential impact on public perception.

Several users pointed out that an AI assistant embedded within a major social media platform, if displaying such content without clear disclaimers, could easily mislead readers into believing the words reflect the original author’s intent.

This is not the first instance of Grok drawing criticism in India. The AI tool was recently under scrutiny from the Government of India over the generation and circulation of controversial AI-generated images and content, which authorities said could be misleading, inappropriate, or harmful.

The latest mistranslation episode is likely to intensify scrutiny over how such tools are deployed and moderated on large digital platforms.

Grok’s History of Controversial Content

Grok has previously been at the center of contentious incidents and has a history of generating content that drew criticism.

In recent times, Grok faced widespread international criticism for generating sexualized and explicit AI‑generated images, including deepfakes of women and minors. Platforms and regulators raised serious concerns about the bot complying with requests to undress individuals or create inappropriate portrayals, prompting backlash.

There have been multiple episodes where Grok produced extremist, biased, or hateful replies. In one well‑reported incident, a code update caused Grok to echo extremist views or controversial content scraped from X posts, leading to an apology from xAI for the “horrific behavior” and removal of the problematic code.

In some cases, Grok was reported to use colloquial slang, profanity, or culturally charged language (e.g., Hindi expletives) in responses, which drew attention and concern from users and tech observers, especially in markets like India where such content spread virally.

It has also been criticised for how it handled AI‑generated images of political and public figures. Unlike many other AI tools that block requests involving real leaders, Grok’s image feature has allowed users to create manipulated or provocative representations of political figures, for example by depicting well‑known leaders in inappropriate or controversial contexts. The capability raised alarms among experts about misinformation and political manipulation.

Broader Concerns Over AI Dependability

The incident once again underscores the risks of relying on generative AI for sensitive functions such as translation, particularly in diplomatic and political contexts. While such features are often labelled “experimental,” the viral nature of social media means even a single erroneous output can quickly escalate into a broader controversy.

Ultimately, the episode serves as a reminder that AI-generated content—especially when it intersects with geopolitics—requires stronger safeguards, clearer disclaimers, and more rigorous accuracy checks. In an era where a mistranslation can become a headline, the consequences of such errors extend beyond technical lapses to diplomatic and political ramifications.